ICICI Bank's telephone service identifies account holders via voice recognition. The accelerator pedal in the Mercedes-Benz S550 Plug-In Hybrid vibrates when the car is about to switch from battery power to gas. RideOn augmented reality ski goggles project digital information in front of the wearer's eyes.

Three recently launched innovations. Three glimpses of a trend that will reshape the way people interact with technology – and with brands.

Mobile changed everything. Mobile changed nothing.

Don't worry, you don't have to choose – both are true ;)

It feels like it’s been a lifetime since smartphones started radically transforming the lives of people across the globe.

OK, it’s been about eight years. Still, now that many of the behaviors around mobile are firmly established – and highly visible – it’s possible to take a step back and assess the impact of this revolution.

One way of looking at all this? Yes, mobile has been a revolution in myriad ways, including the explosion in the volume of content available to on-the-go consumers. But the human experience of reading on a mobile device?

Still holding a rectangle. Still looking down.

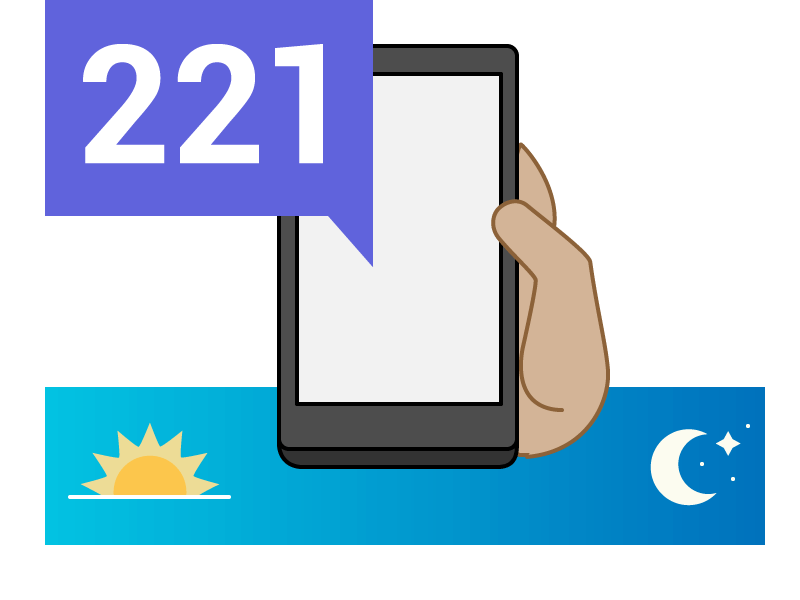

The average smartphone user checks their phone 221 times a day.

Tecmark, October 2014

That's why your (useful, entertaining, informative, utterly addictive) phone is ruining your life.

Or, at least, that's how it's starting to feel for many consumers.

The volume of information and digital task-management – news alerts, WhatsApp messages, Uber arrival notifications, viral videos, and on, and on – streaming at consumers today is vast.

But no, this is not simply about too much information: after all, apps can be deleted and behaviors can be stopped – if that’s what a user wants.

Rather, the digital revolution has made available too much truly great information and functionality.

Connected people – they, you, all of us – are trapped in a paradox: the digital information and functionality that we love is becoming so behaviorally, socially and cognitively intrusive that it’s starting to impact on our relationships, productivity, ability to concentrate – we could go on. And yet we still want more!

No wonder rising numbers of consumers are looking for a new approach.

Truly natural, intuitive interaction with technology has always been the dream. That is, interaction so natural, so human, that it needs NO INTERFACE.*

So what’s different now? In 2016 NO INTERFACE will finally become a reality, thanks to the powerful convergence of primed user expectation and new technologies that are both fueling and serving the need for more natural interactions with tech.

*This Briefing wouldn’t be complete without a nod to Golden Krishna, who coined the phrase, and literally wrote the book on, NO INTERFACE technologies.

Now, a whole host of (next generation, mobile first, emerging market) innovators are applying this trend. In March 2015, Alibaba founder Jack Ma demonstrated technology that will allow users to pay 'selfie style' using smartphone face recognition.

First, users are primed and ready.

Heavy (and rising) device use, digital services that run in the background, and voice search are all priming users to demand NO INTERFACE solutions.

So why are NO INTERFACE interactions becoming a real concern for ordinary users (rather than just a tech-geek fantasy)?

Consider: time spent on devices is still rising. Across 30 markets, time spent on mobile devices now averages 1.35 hours (about 81 minutes) per day, up from 40 minutes in 2012 (GlobalWebIndex, January 2015).

Something has to give. Consumers know that if they are to continue – indeed, intensify – their love affair with tech, they need newer, faster and more intuitive ways to interact with it.

Meanwhile, the mainstreaming of contextual, anticipatory services (such as Google Now) has made smartphone users accustomed to the idea that digital services should be unobtrusive. Combined with the rise of effective voice interaction, this shift has primed device users to expect more NO INTERFACE innovation.

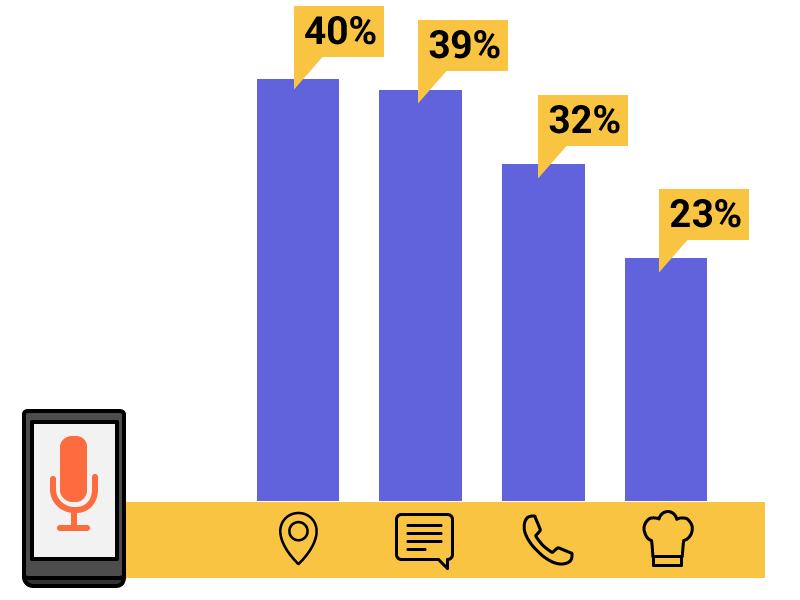

40% of adult smartphone owners use voice search to ask for directions, 39% to dictate a text message, 32% to make a phone call, and 23% while they are cooking.

Google Mobile Voice Study, October 2014

Second, new technologies are ramping up user need for NO INTERFACE tech – and providing new answers.

Wearables and the Internet of Things are about to change the game.

Think consumers have more information than they can handle now? Wait until multiple household objects are online and sending notifications.

The Internet of Things, wearable devices and beacon technology are about to send a whole new avalanche of notifications, alerts, data, deals and more to the screens of willing consumers. One indication of what’s to come? Tech analysts forecast that 4.9 billion connected objects will be in use in 2015, up 30% on 2014 (Gartner, November 2014).

But while new technologies are fueling the consumer need for more intuitive interactions with technology, they are also providing new solutions. Yes, there’s the tap on the wrist (will it become the standard NO INTERFACE notification?), but also connected objects that respond to voice, Sat Navs that bring augmented reality to driving, tablets that understand gestures (all below) and much more.

VOICE

There are few interactions faster and more natural than simply talking.

Amazon Echo

Always-on smart digital assistance device

Unveiled in the US during November 2014, Amazon Echo is an always-on, cloud-connected device that can answer spoken questions, searching the internet for answers instantly and speaking its replies. It’s also possible to tell Echo to add products to a shopping list, accessible via a companion app, or play music via Bluetooth integration.

ING

Mobile banking app can be controlled via voice

September 2014 saw Dutch bank ING launch a voice control feature called Inge inside its mobile banking app. Available to Dutch customers, the feature allows users to check their balance, make payments and discover where their nearest ING branch is. ING say future iterations of the app will allow users to log in to the app entirely via voice recognition, dispensing with the need for passwords.

Moto Hint

Bluetooth earbud allows voice control of smartphone

In October 2014 Motorola launched the Moto Hint, a Bluetooth-enabled earbud that allows users to control any smartphone via voice commands. Users sync the earbud to their phone, and can then ask questions such as ‘How do I get home?’ to receive answers directly in their ear. They can also make calls, get notifications and send and receive messages. Almost enough to make anyone fall in love with their smartphone – see Spike Jonze’s movie Her for a glimpse of what might be ahead ;)

OneTravel

In-app voice-activated travel agent will search for and book flights

In March 2015, OneTravel launched Opal, a voice-activated travel agent inside the online travel booking platform’s mobile app. Users tap the microphone icon to activate Opal, and can then ask it to search across more than 450 airlines. Opal will ask for any missing search criteria and use context clues to understand whether a search is for one-way or return flights.

Ubi

Always-on computer brings voice-activation into the home

October 2014 saw the launch of Ubi, a WiFi-connected, always-on, voice-operated home assistant that allows for hands-free interaction. Ubi can be used to send messages to contacts, look up information, play music, and control other smart devices. The device is compatible with a range of other services, such as Nest and SmartThings.

GESTURE

Natural gestures make for a super-intuitive way to interact with devices.

Ring ZERO

Reboot of wearable ring that allows gesture control of smart devices

Demonstrated at SXSW 2015, Ring ZERO is a smart ring that lets the wearer control almost any smart device with gestures. Devices are paired with the Ring and controlled by drawing designated shapes with the ring-bearing finger, such as an envelope for email. Ring ZERO is a new iteration of the first-generation Ring, launched in 2014, which was criticized for poor usability. Makers Logbar say Ring ZERO's gesture recognition is 300% more accurate than its predecessor's.

singlecue

Device allows gesture control of connected home

Launched in November 2014, singlecue (formerly known as onecue) is a camera-equipped device that lets the user control multiple other household devices via gesture. singlecue can sit on a desktop or on top of a television, and can be used to control Apple TV, the Nest Learning Thermostat, Xbox, and cable and satellite TV, among others. Gestures can be customized through Android and iOS apps.

MotionSavvy

Tablet case facilitates sign-to-word communication in real-time

Securing Indiegogo funding in December 2014, MotionSavvy is a tablet case that uses gesture recognition technology to convert sign language into spoken English. The US-developed case is embedded with a Leap Motion controller (a device that tracks hand movements using a camera), and signed words or phrases appear as text on the tablet screen and are also spoken aloud. Every time a user signs, MotionSavvy learns their personal style and shortcuts, allowing more personalized text to be displayed.

Microsoft Gestures

App allows gesture control of Windows Phone

In December 2014, Microsoft released Gestures: an app that allows users to control their Windows Phone without touching the phone’s display. Once the app (currently in beta) is downloaded, users can answer a call simply by raising the phone to their ear, mute a call in progress by placing the phone face down, switch to speaker by placing it face up, or silence an incoming call by flipping the device display-side down.

TOUCH

This time, touch interactions mean digital information that users can literally feel.

Apple Watch

Smart watch allows 'taptic' vibrating alerts

Apple helped fuel expectations of ultra-natural interactions back in September 2013 when the iPhone 5S launched with Touch ID fingerprint recognition. Now, they’re setting the bar much higher. Released in April 2015, the Apple Watch offers ‘a whole new way to stay in touch’ thanks to its ability to give a vibrating ‘tap on the wrist’ – users can even record and send their heartbeat to one another. Will Apple define yet another device category – and kick the NO INTERFACE trend into the mainstream? The world is watching…

Mercedes-Benz

Vibrating accelerator pedal provides information on driving mode

Announced in September 2014, the S550 Plug-In Hybrid is the plug-in hybrid version of Mercedes-Benz's flagship S-Class sedan. The vehicle includes a haptic accelerator pedal, which uses a series of vibrating pulses to alert the driver when the car is about to shift from battery power to gas. Drivers can then take their foot off the accelerator to remain on battery power.

TACTspace

App and device for sensory messages

Shortlisted for the WT Innovation World Cup in February 2015, US-based TACTspace is intended to allow users to send and receive emotions via physical touch. Via the TACTspace app, users can send messages such as heartbeat vibrations or warmth to a coin-sized vibrating disc, the TACTpuck. The TACTpuck can be worn as a pendant or inside a bracelet or clothing.

Hug

App to send vibrating 'hug' to another smartphone user

Launched in March 2015, Hug is a free mobile app that allows users to send ‘the emoji you can feel’ to another smartphone user. To send a hug with the UK-made app, a user holds their phone to their heart, and a vibration for the same length of time as their embrace is sent to their friend or loved one. Hugs can be sent to multiple others at once, and different types of hugs can convey different emotions.

AUGMENTED REALITY

New technologies are seamlessly blending digital information with the physical world.

Microsoft HoloLens

AR headset integrates holograms with the physical world

January 2015 saw Microsoft announce HoloLens, an augmented reality headset that allows users to pin holograms to their physical environment. Built on the Windows 10 operating system, the wireless headset allows a user to see digital content such as apps, data, graphics and video, and interact with these as they would other objects in their environment.

Navdy

Display device projects apps onto car windscreens

Unveiled in the US during August 2014, Navdy is an in-car device that projects smartphone information at eye level. The head-up display projects a floating image six feet in front of the driver, who can also use gestures to answer or dismiss calls, and use voice commands such as ‘call mom’ or ‘compose new tweet’.

RideOn

AR goggles for skiers and snowboarders

Closing its funding campaign on Indiegogo in March 2015, RideOn are augmented reality ski goggles that project data and graphics onto the snow in front of the wearer. Users interact with the information by looking at it, and can use RideOn to navigate, keep track of their friends, check ski lift queue times, and more.